My experiments with “Amazon Bedrock” — tips to get engineering college admissions using GenAI for your kid, text summarization, generating cool images and more ;-)

In the software/IT world, I believe there are some technologies which are “gamechangers” and become a defining moment, with their impact felt for many years/decades to come .. Technologies like Java, Linux, rise of NoSQL databases, Public Cloud and more recently, Generative AI have had such an impact. Like many others in the tech industry, I am also constantly learning/un-learning these new technologies to keep pace with what the industry and our customers want to build.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon with a single API, along with a broad set of capabilities you need to build generative AI applications, simplifying development while maintaining privacy and security.

On 28th September 2023, AWS announced the general availability of Amazon Bedrock. The announcement also carried the awesome surprise that, alongside the foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Stability AI, and Amazon, Meta’s Llama 2 13B and 70B parameter models will soon be available on Amazon Bedrock !!

There are enough blogs and documentation on Amazon Bedrock, on the AWS website as well as other websites, which cover a lot of the details about this new service; please do see the section on resources at the end of the blog. In this blog, as always, I will cover some of the quick experiments that I cooked up to get myself familiar with Amazon Bedrock, leveraging some of its key strengths.

Note: These are my personal thoughts; please refer to the Bedrock website and documentation for the latest information. You will also incur charges when you set these AWS services up in your AWS account, so please make sure you turn them off or delete them when you no longer need them. Again, these are experiments, so please treat them as such.

In my (limited) experience to date in working with customers, some of the key use-cases where Generative AI is being used are:

- Text Generation: generating text using large language models results in productivity gains.

- Text Summarization: involves extracting the most relevant information from a text document and presenting it in a concise and coherent format. I will showcase two simple scenarios: summarizing a recent Government of India legislation document, the DIGITAL PERSONAL DATA PROTECTION ACT, 2023, which is around 21 pages long, and summarizing my own resume ;-)

- Question answering: involves extracting answers to factual queries posed in natural language. Relying only on the data that the model was trained on is not useful for companies that need to query domain-specific and proprietary data and use that information to answer questions. This can be overcome by using the Retrieval Augmented Generation (RAG) technique. RAG combines embeddings, which index the corpus of documents to build a knowledge base, with an LLM that extracts the information from a subset of the documents in the knowledge base. Again, I will try a use case which can probably help budding parents get a leg up in selecting the right engineering colleges for their kids by leveraging Amazon Bedrock 🤣

- Conversational interface with chatbots: can be used to enhance the user experience for your customers.

- Image generation: can be used for creating realistic and artistic images of various subjects, environments, and scenes from language prompts.

- Finally, code generation: while we have an awesome service called Amazon CodeWhisperer as a coding companion, we can also use the foundational models in Bedrock for code generation work such as generating code and SQL queries, explaining code in different programming languages, and translating code from one programming language to another.

I will use my own quirky use-cases (🙂) to quickly experiment with some of the above concepts on Amazon Bedrock. My examples are meant to help me get up to speed; please do your own proofs of concept with your data.

While Amazon Bedrock is serverless, there are some key parameters which can control the output response from the foundational models. Please note that the usage of these parameters will vary based on the models being used.

Key parameters that can help in tweaking the response from the LLMs:

The Temperature parameter allows the model to be more “creative” when constructing a response. A temperature of 0 means no randomness — the most likely words are chosen each time. To get more variability in responses, you can set the Temperature value higher and run the same request several times.

The Response length parameter determines the number of tokens to be returned in the response. You can use this to shorten or increase the amount of content returned by the model. If you set the length too low, you might cut off the response before it is completed.

Please check out https://docs.aws.amazon.com/bedrock/latest/userguide/model-parameters.html for more details on the above parameters
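To make the two parameters above concrete, here is a toy sketch of how they behave. It assumes the common temperature-scaled softmax sampling scheme; the models on Bedrock do not expose their internals, so treat this as an illustration only. The `toy_logits` scores and the function names are made up for this example.

```python
import math
import random


def sample_next_token(logits, temperature, rng=None):
    """Pick one token from a {token: score} dict using temperature scaling."""
    if temperature <= 0:
        # Temperature 0: no randomness, always take the most likely token.
        return max(logits, key=logits.get)
    rng = rng or random.Random()
    # Divide the scores by the temperature, then apply a numerically stable softmax.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(score - peak) for tok, score in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


def generate(logits, temperature, max_tokens, rng=None):
    """Sample at most max_tokens tokens; a low limit cuts the output short.

    A real model recomputes the scores after every token; this toy version
    reuses the same scores to keep the example small.
    """
    return [sample_next_token(logits, temperature, rng) for _ in range(max_tokens)]


# Hypothetical scores for three candidate tokens.
toy_logits = {"college": 2.0, "campus": 1.0, "cutoff": 0.5}

print(generate(toy_logits, temperature=0, max_tokens=3))
print(generate(toy_logits, temperature=1.0, max_tokens=3, rng=random.Random(7)))
```

With temperature 0 every run returns the same top-scoring token, while higher temperatures flatten the distribution so repeated runs start to differ.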

A “token” is the basic unit that an LLM uses to process input and generate output. A token is made up of a few characters, so a token ≠ a word. An approximate rule of thumb is 1 token ~= 4 chars in English, or 100 tokens ~= 75 words. If you look at Bedrock pricing, tokens play a large part, and hence it is important for you to understand tokens.
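The rule of thumb above can be turned into a quick back-of-the-envelope estimator. This is only a rough sketch: real tokenizers differ per model, and the price used in the usage lines is a made-up placeholder, not an actual Bedrock price.

```python
def estimate_tokens(text):
    """Rough estimate using about 4 characters per token (English text)."""
    if not text:
        return 0
    return max(1, round(len(text) / 4))


def estimate_tokens_from_words(word_count):
    """Rough estimate using about 100 tokens per 75 words."""
    return round(word_count * 100 / 75)


def estimate_cost(tokens, price_per_1000_tokens):
    """Cost for a token count, given a price per 1,000 tokens."""
    return tokens / 1000 * price_per_1000_tokens


sample = "Summarize the attached document in five bullet points."
tokens = estimate_tokens(sample)
# 0.01 is a hypothetical price per 1,000 tokens; check the Bedrock pricing page.
print(tokens, estimate_cost(tokens, price_per_1000_tokens=0.01))
```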

Question answering using Retrieval Augmented Generation (RAG)

A foundational base model is trained on data from a point in time, so when you need to work with new data, you need a different approach. There are two approaches to using new data with a base model:

- Fine-tuning the model: this improves the model by retraining it with the new data, but it can be expensive.

- Using a RAG approach: this retrieves the information most relevant to the user’s request from the enterprise knowledge base or content, bundles it as context along with the user’s request into a prompt, and then sends it to the LLM to get a GenAI response. Please see this blog for more details on the use of vector datastores in Generative AI applications and the various options. In terms of native AWS service options for the retrieval or vector datastore layer, you have the choice of using Amazon Kendra, Amazon Aurora PostgreSQL with pgvector, Amazon OpenSearch or Amazon RDS for PostgreSQL.
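The retrieve, bundle and send flow of the RAG approach can be sketched in a few lines. This is a minimal illustration and not how Kendra or any vector datastore works internally: the “embedding” is a plain bag-of-words count standing in for a real embedding model, the knowledge base entries are placeholders, and the final call to the LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=2):
    """Return the top_k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents, top_k=2):
    """Bundle the retrieved context with the user's question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Placeholder entries; a real knowledge base would hold the actual documents.
knowledge_base = [
    "Placeholder: College A computer science closing rank for exam X.",
    "Placeholder: College B mechanical engineering closing rank for exam Y.",
    "Placeholder: hostel fee structure for College C.",
]

question = "What is the closing rank for computer science at College A?"
print(build_prompt(question, knowledge_base, top_k=1))
```

The resulting prompt is what would be sent to the LLM, so the model answers from the retrieved context rather than only from its training data.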

My use case: our daughter just entered an engineering college in Bengaluru, and as some of you may know, I have published a couple of blogs over the last two years on leveraging AWS services like Amazon Kendra while she was in the 10th grade and 12th grade !! As any parent in India knows, it is an extremely competitive environment for kids to get into good engineering and medical colleges. There are multiple entrance exams at the state and central level, like JEE (for getting into IITs, NITs, IIITs etc), BITSAT (for the popular Birla Institute of Technology and Science) and state level exams. In our case it was Karnataka, which has two primary entrance exams: CET and COMEDK. We had to navigate this maze of data for each of these exams manually, maintaining Excel sheets for the various cutoffs required for college admissions. After this harrowing experience, I wondered: with the launch of Bedrock and the RAG approach, can parents get an easier way to gather intelligence on the various colleges and their cutoffs, and be better prepared?

As always with AWS, I found an awesome solution published on the AWS GitHub which I could deploy to test my hypothesis !! This solution gives multiple options for both foundational models and vector data stores. Setting up this LLM RAG based chatbot, leveraging Amazon Bedrock and an LLM like Anthropic Claude with Amazon Kendra as the document retriever, was a breeze. I then uploaded a bunch of cutoff documents with previous years’ cutoffs from BITSAT, COMEDK and Karnataka CET into the Kendra document repository and started asking questions !! I was amazed at some of the responses. I am sure that I can improve on the prompts that I used and make better use of other prompt engineering techniques as well.

Some screenshots from the chatbot, as well as the responses for my queries based on the data for engineering seats from the past BITSAT, COMEDK cutoffs 🙂

The above solution can be leveraged for other real world business problems and personal use-cases as well !! Thank you, Bigad Soleiman and Sergey Pugachev, for this awesome LLM based chatbot solution.

Document Summarization

Get summaries of long documents such as articles, reports, and even books to quickly get the gist using various Foundation Models on Amazon Bedrock.

I used this simple app from the Amazon Bedrock workshop to set up a simple document summarizer with Amazon Bedrock, using the LangChain and Streamlit frameworks.

For testing the summarizer functionality, I used a 21-page document on a new and very important piece of legislation in India, “THE DIGITAL PERSONAL DATA PROTECTION ACT, 2023”, from https://www.meity.gov.in/writereaddata/files/Digital%20Personal%20Data%20Protection%20Act%202023.pdf. The result was a pretty good summary of the act.
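A long document usually has to be split before it can be summarized. The sketch below shows one common pattern, often called map-reduce summarization: split the text into overlapping chunks, summarize each chunk, then summarize the combined partial summaries. I am assuming this pattern for illustration and have not confirmed it is exactly what the workshop app does; `first_sentence` is a placeholder summarizer so the code runs without calling an LLM.

```python
def split_into_chunks(text, chunk_size=4000, overlap=200):
    """Split text into overlapping character windows."""
    if chunk_size <= overlap:
        raise ValueError("chunk_size must be larger than overlap")
    if not text:
        return []
    step = chunk_size - overlap
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]


def summarize_long_text(text, summarize, chunk_size=4000, overlap=200):
    """Map-reduce: summarize each chunk, then summarize the joined summaries."""
    chunks = split_into_chunks(text, chunk_size, overlap)
    if not chunks:
        return ""
    partial = [summarize(chunk) for chunk in chunks]  # map step
    if len(partial) == 1:
        return partial[0]
    return summarize("\n".join(partial))  # reduce step


def first_sentence(text):
    """Placeholder summarizer; swap in a call to your LLM of choice."""
    return text.strip().split(".")[0][:80]


document = "Section one covers definitions. " * 50 + "Section two covers obligations. " * 50
print(summarize_long_text(document, first_sentence, chunk_size=500, overlap=50))
```

In a real app the `summarize` argument would wrap the LLM call, and the chunk size would be chosen to fit the model's token limit.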

I also wanted to test another fun use case, which can also be useful in the real world for companies that need summaries of the hundreds or thousands of resumes that job seekers may have shared with their recruitment teams !! I tested with my own resume to get a summary and got the following result:

You can switch LLMs and check out the different responses in the sample app:

For using AI21 Jurassic 2:

    import os
    from langchain.llms.bedrock import Bedrock #import path in LangChain releases from late 2023; newer releases may differ

    model_kwargs = { #AI21
        "maxTokens": 8000,
        "temperature": 0,
        "topP": 0.5,
        "stopSequences": [],
        "countPenalty": {"scale": 0 },
        "presencePenalty": {"scale": 0 },
        "frequencyPenalty": {"scale": 0 }
    }

    llm = Bedrock(
        credentials_profile_name=os.environ.get("BWB_PROFILE_NAME"), #sets the profile name to use for AWS credentials (if not the default)
        region_name=os.environ.get("BWB_REGION_NAME"), #sets the region name (if not the default)
        endpoint_url=os.environ.get("BWB_ENDPOINT_URL"), #sets the endpoint URL (if necessary)
        model_id="ai21.j2-ultra-v1", #set the foundation model
        model_kwargs=model_kwargs) #configure the properties for AI21 Jurassic 2

If you intend to use Anthropic Claude:

    model_kwargs = { #Anthropic Claude
        "max_tokens_to_sample": 6000,
        "temperature": 0,
        "top_p": 0.5,
        "top_k": 250,
        "stop_sequences": [],
    }

    llm = Bedrock(
        credentials_profile_name=os.environ.get("BWB_PROFILE_NAME"), #sets the profile name to use for AWS credentials (if not the default)
        region_name=os.environ.get("BWB_REGION_NAME"), #sets the region name (if not the default)
        endpoint_url=os.environ.get("BWB_ENDPOINT_URL"), #sets the endpoint URL (if necessary)
        model_id="anthropic.claude-v2", #set the foundation model
        model_kwargs=model_kwargs) #configure the properties for Claude

As you can see, there are some subtle differences between LLMs in terms of the model parameters.
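Since the parameter names differ per model family, one option is a small helper that maps a common set of settings to each provider's key names. This is just a sketch: `build_model_kwargs` is a hypothetical helper of my own, and it only covers the two models and the keys shown in the snippets above.

```python
def build_model_kwargs(model_id, max_tokens, temperature=0, top_p=0.5):
    """Map common settings to provider-specific key names.

    The provider is taken from the prefix of the Bedrock model ID,
    for example "ai21" in "ai21.j2-ultra-v1".
    """
    provider = model_id.split(".")[0]
    if provider == "ai21":
        return {
            "maxTokens": max_tokens,
            "temperature": temperature,
            "topP": top_p,
            "stopSequences": [],
            "countPenalty": {"scale": 0},
            "presencePenalty": {"scale": 0},
            "frequencyPenalty": {"scale": 0},
        }
    if provider == "anthropic":
        return {
            "max_tokens_to_sample": max_tokens,
            "temperature": temperature,
            "top_p": top_p,
            "top_k": 250,
            "stop_sequences": [],
        }
    raise ValueError(f"No parameter mapping defined for provider '{provider}'")


print(build_model_kwargs("ai21.j2-ultra-v1", max_tokens=8000))
print(build_model_kwargs("anthropic.claude-v2", max_tokens=6000))
```

The returned dictionary can be passed as `model_kwargs` when constructing the LLM, so switching models only means changing the model ID.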

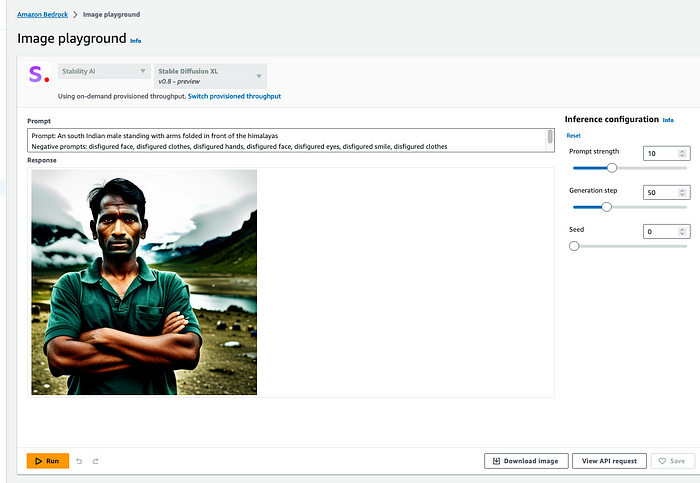

Image generation

Image generation is available on Amazon Bedrock with Stable Diffusion XL, the most advanced text-to-image model from Stability AI. Stable Diffusion XL generates images of high quality in virtually any art style. Some usecases for Image generation include advertising, gaming, metaverse, media and entertainment and more.

In my experiments, prompts play a key role in how the image gets generated. Experiment with them, and get great results !!

For the following prompt, I get an awesome image with Stable Diffusion on Amazon Bedrock:

Prompt: An Indian male standing with arms folded in front of the himalayas

Negative prompts: disfigured face, disfigured clothes, disfigured hands, disfigured face, disfigured eyes, disfigured smile, disfigured clothes

Style preset: digital art

with a slight tweak to the prompts:

Prompt: An south Indian male standing with arms folded in front of the himalayas

Negative prompts: disfigured face, disfigured clothes, disfigured hands, disfigured face, disfigured eyes, disfigured smile, disfigured clothes

Style preset: high resolution color photography

this new image gets generated !!

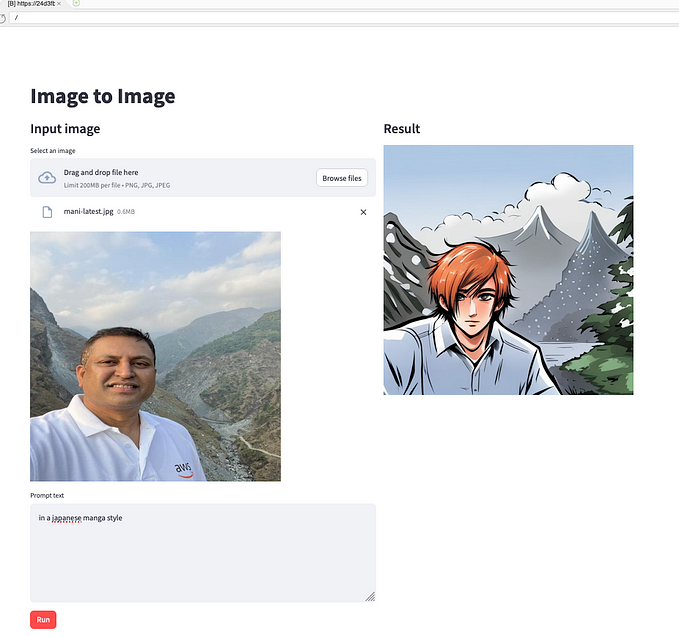
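If you want to send the same kind of prompt through the API instead of the console, the request body might be assembled as below. This is a sketch under assumptions: the field names (`text_prompts`, `weight`, `cfg_scale`, `steps`, `seed`, `style_preset`) and the `digital-art` preset value follow my understanding of the Stability AI request format on Bedrock, so please verify them against the current documentation. The helper name is hypothetical, and the actual model invocation is left out.

```python
import json


def build_sdxl_request(prompt, negative_prompts=(), style_preset=None,
                       cfg_scale=10, steps=50, seed=0):
    """Build a JSON request body for a text-to-image call (assumed format)."""
    text_prompts = [{"text": prompt, "weight": 1.0}]
    # Negative prompts carry a negative weight; dict.fromkeys drops duplicates
    # while keeping the original order.
    for negative in dict.fromkeys(negative_prompts):
        text_prompts.append({"text": negative, "weight": -1.0})
    body = {
        "text_prompts": text_prompts,
        "cfg_scale": cfg_scale,
        "steps": steps,
        "seed": seed,
    }
    if style_preset:
        body["style_preset"] = style_preset
    return json.dumps(body)


request_body = build_sdxl_request(
    "An Indian male standing with arms folded in front of the himalayas",
    negative_prompts=["disfigured face", "disfigured clothes", "disfigured hands",
                      "disfigured face", "disfigured eyes", "disfigured smile"],
    style_preset="digital-art",
)
print(request_body)
```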

There is also image-to-image generation functionality using Stable Diffusion, Amazon Bedrock, and Streamlit. This can be tested using the sample application created by one of our amazing teams in the Amazon Bedrock workshop.

Code Generation

Amazon CodeWhisperer is the AI coding companion, a generative AI tool focused on suggesting code inside your IDE. It has Individual (free) and Professional (paid) tiers.

You can also leverage the Foundational Models on Bedrock for Code Generation, SQL Generation, Code Translation and Explanation with the following sample Python notebooks:

Code Generation: Demonstrates how to generate Python code using natural language. It shows examples of prompting to generate simple functions, classes, and full programs in Python for a data analyst performing sales analysis on a given sales CSV dataset.

Database or SQL Query Generation : Focuses on generating SQL queries with Amazon Bedrock APIs. It includes examples of generating both simple and complex SQL statements for a given data set and database schema.

Code Explanation : Uses Bedrock’s foundation models to generate explanations for complex C++ code snippets. It shows how to carefully craft prompts to get the model to generate comments and documentation that explain the functionality and logic of complicated C++ code examples. Prompts can be easily updated for other programming languages.

Code Translation : Guides you through translating C++ code to Java using Amazon Bedrock and LangChain APIs. It shows techniques for prompting the model to port C++ code over to Java, handling differences in syntax, language constructs, and conventions between the languages.
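To give a feel for what such prompting looks like, here is a minimal sketch of a prompt builder for SQL generation. It is my own illustration and not taken from the notebooks: the function name, the wording of the instructions and the sample schema are all hypothetical. The `Human:` and `Assistant:` markers follow the text-completion prompt format that Anthropic Claude v2 expects.

```python
def build_sql_prompt(schema, question):
    """Build a Claude-style prompt asking for a single SQL query."""
    return (
        "\n\nHuman: You are a SQL assistant. Using the schema below, write one "
        "SQL query that answers the question. Return only the SQL.\n\n"
        f"<schema>\n{schema.strip()}\n</schema>\n\n"
        f"Question: {question.strip()}"
        "\n\nAssistant:"
    )


# Hypothetical schema, used only to show the shape of the prompt.
sample_schema = """
CREATE TABLE sales (
    order_id INTEGER,
    product TEXT,
    quantity INTEGER,
    order_date DATE
);
"""

print(build_sql_prompt(sample_schema, "Which product sold the most units?"))
```

The same structure works for code explanation or translation by changing the instruction text and swapping the schema block for the source code.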

That’s it folks !! I have barely scratched the surface of what can be achieved with Amazon Bedrock .. As I said, Generative AI is truly a revolutionary piece of technology and it’s going to affect our lives in so many ways.

Hope this blog was useful. Please note that this is a very rapidly evolving technology, and you need to keep yourselves updated to be aware of the new things that come up every day. I have not covered two more important features in Bedrock:

- Agents for Amazon Bedrock: certain applications demand an adaptable sequence of calls to language models and various utilities depending on user input. The Agent interface enables such flexibility for these applications. An agent has access to a range of resources and selects which ones to use based on the user input. For example, Agents for Amazon Bedrock can orchestrate a user-requested task, such as “send a reminder to all policyholders with pending documents,” by breaking it into smaller subtasks like getting claims for a certain time period, identifying the paperwork required, and sending reminders. The agent determines the right sequence of tasks and handles any error scenarios along the way.

- Knowledge bases for Bedrock (currently in limited preview): while we explored RAG above by leveraging other AWS services like Kendra, with knowledge bases for Amazon Bedrock you can connect FMs to your data sources for retrieval augmented generation (RAG) from within the managed service, extending the FM’s already powerful capabilities and making it more knowledgeable about your specific domain and organization.

A shameless personal plug: I am hosting a series of shows on twitch.tv/aws called “India Innovates on AWS”. We have had two great shows, and two more are on the calendar. Please do register and spread the word: https://pages.awscloud.com/india-innovates-on-aws-regi.html

I will cover more details on the above two features in later blogs .. Until then, take care and start experimenting and leveraging the power of Generative AI with Amazon Bedrock.

Namaskara 🙏 !!

Workshops/useful resources around Amazon Bedrock:

- Amazon Bedrock documentation

- Deploying a Multi-LLM and Multi-RAG Powered Chatbot Using AWS CDK on AWS

- Amazon Bedrock Workshop — https://catalog.us-east-1.prod.workshops.aws/workshops/a4bdb007-5600-4368-81c5-ff5b4154f518/en-US

- Building with Amazon Bedrock and LangChain — https://catalog.workshops.aws/building-with-amazon-bedrock/en-US

- and much more, checkout the AWS samples in GitHub — https://github.com/aws-samples?q=bedrock&type=all&language=&sort=

- Check out the AWS blogs, which have many posts on Bedrock