Amazon Bedrock is a fully managed, serverless service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon. While this should serve most customer use cases, you also have the option of using Amazon SageMaker JumpStart, a machine learning (ML) hub with foundation models, built-in algorithms, and prebuilt ML solutions that you can deploy with just a few clicks and that are then provisioned on servers in AWS.

Apart from these two options, what if you need other foundation models, delivered as a serverless, managed service similar to Bedrock, that are not yet available on Amazon Bedrock and that have been customized in other environments like Amazon SageMaker or are available in a model repository like HuggingFace? Now you can use them !! With the general availability (GA) of Amazon Bedrock Custom Model Import a few days back, you can import these customized models and use them alongside the existing foundation models (FMs) through the same API !!

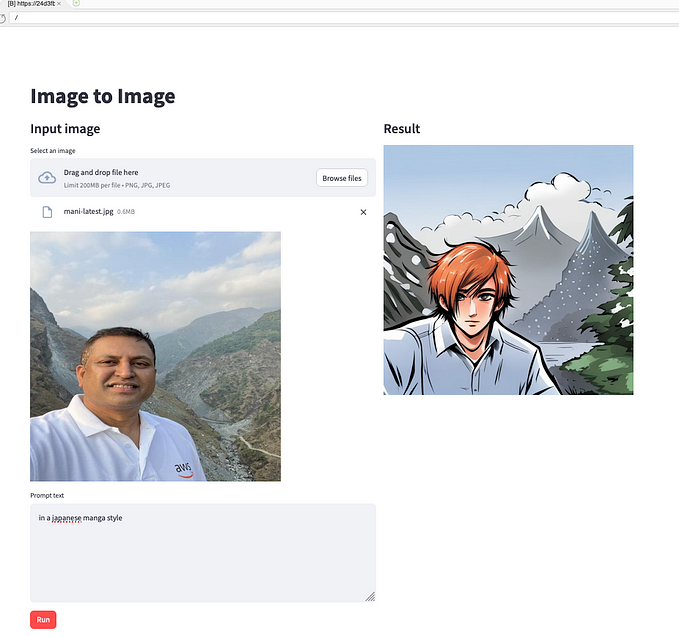

This blog is my quick proof of concept showcasing the Amazon Bedrock Custom Model Import feature: I download a couple of foundation models from HuggingFace, upload them to Amazon S3, and then import them into Amazon Bedrock !!

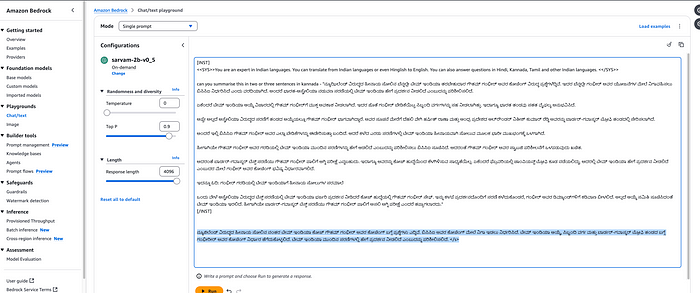

I chose two models hosted on HuggingFace: sarvam-2b-v0.5, a small yet powerful language model pre-trained from scratch on 2 trillion tokens and trained to be good at 10 Indic languages plus English, and CodeLlama-7b-Instruct-hf, a model designed for general code synthesis and understanding.

Let us get started ..

Step 0 — Pre-reqs

There are some prerequisites before you get started; please consult the documentation. Amazon Bedrock Custom Model Import supports a variety of popular model architectures, including Meta Llama, Mistral, Mixtral, Flan and more.

I picked sarvam-2b-v0.5 because it had some great reviews for its support of Indic languages, especially “Hinglish” (where folks use a mix of Hindi and English !!), and CodeLlama because it is a state-of-the-art LLM capable of generating code, and natural language about code, from both code and natural language prompts. Both models are built on Meta AI’s Llama architecture. I also deliberately chose smaller models, as I wanted to do a quick proof of concept and did not want to spend a lot of time downloading the model files from HuggingFace and uploading them to S3.
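Since the architecture is what decides whether a model can be imported, a quick local check of the checkpoint can save a wasted upload. The sketch below reads the `model_type` field from a HuggingFace `config.json`. The set of accepted values is my own assumption, mapped from the architectures named above (Flan-T5 checkpoints report `t5`), and the helper names are made up for illustration; the Bedrock documentation remains the authoritative list.

```python
import json
from pathlib import Path

# Assumption: HuggingFace "model_type" values matching the architectures
# named in this post. This is NOT an official list; check the Bedrock docs.
ASSUMED_SUPPORTED_MODEL_TYPES = {"llama", "mistral", "mixtral", "t5"}


def read_model_type(model_dir):
    """Return the "model_type" field from a checkpoint's config.json."""
    config = json.loads((Path(model_dir) / "config.json").read_text())
    return config.get("model_type")


def looks_importable(model_dir):
    """Rough pre-check: is the architecture in the assumed supported set?"""
    return read_model_type(model_dir) in ASSUMED_SUPPORTED_MODEL_TYPES
```

Calling `looks_importable("sarvam-2b-v0.5")` on a cloned repo would be one way to use it. Passing this check does not guarantee that the import job succeeds.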

Step 1 — Downloading the model files and uploading to Amazon S3

The model files are usually large, often several GiB. You can either use git clone (with Git LFS installed, so that the large weight files are actually fetched) or use other tools like HuggingFace’s hf_transfer; check out https://huggingface.co/docs/hub/en/models-downloading for more guidance. Alternatively, if you created the model in Amazon SageMaker, you can specify the SageMaker model instead.

I used the following commands to download the model files and upload them to an Amazon S3 bucket. They are shown here for Sarvam; I used the same pattern for CodeLlama.

git clone https://huggingface.co/sarvamai/sarvam-2b-v0.5

aws s3 cp sarvam-2b-v0.5/ s3://mani-s3-<<mybucket>>/sarvam-2b-v0.5/ --recursive

The S3 bucket directory looks something similar to this:

Tip: The cloned HuggingFace repo contains a .git directory, which I don’t think is needed for Bedrock Custom Model Import. Consult the documentation for the files that Custom Model Import requires. You can save a lot of time during download and upload if you skip this directory !!
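To illustrate the tip, here is a minimal sketch of an upload that skips the .git directory. The helper names are hypothetical, and the upload function assumes boto3 is installed and AWS credentials are configured. I did not run this as part of the proof of concept.

```python
from pathlib import Path


def iter_upload_targets(local_dir, key_prefix, skip_dirs=(".git",)):
    """Yield (local_path, s3_key) pairs for every file outside skip_dirs."""
    root = Path(local_dir)
    for path in sorted(root.rglob("*")):
        relative = path.relative_to(root)
        # Skip directories themselves and anything that lives under a skipped dir.
        if not path.is_file() or any(part in skip_dirs for part in relative.parts):
            continue
        yield path, f"{key_prefix.rstrip('/')}/{relative.as_posix()}"


def upload_model_dir(local_dir, bucket, key_prefix):
    """Upload the filtered files to S3 (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper above works without boto3

    s3 = boto3.client("s3")
    for path, key in iter_upload_targets(local_dir, key_prefix):
        s3.upload_file(str(path), bucket, key)
```

If you prefer to stay on the command line, adding `--exclude ".git/*"` to the `aws s3 cp ... --recursive` command should have a similar effect.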

Step 2 — Import Model into Bedrock

The next few steps are fairly easy; Amazon Bedrock has made this very simple. Once the model files are in an S3 bucket (or the model is available in SageMaker), you choose “Import model”, which kicks off an import job. The job took around 10–13 minutes for me, and voila, the model was available for inferencing.
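I used the console for this step, but the same import job can be started from code. The sketch below uses the boto3 `bedrock` client's `create_model_import_job` and `get_model_import_job` calls. The function names, job name, model name, role ARN and bucket are placeholders of my own, and the IAM role is assumed to allow Bedrock to read the S3 location.

```python
def build_import_job_request(job_name, model_name, role_arn, s3_uri):
    """Assemble the keyword arguments for create_model_import_job."""
    return {
        "jobName": job_name,
        "importedModelName": model_name,
        "roleArn": role_arn,  # assumed: a role Bedrock can assume to read the bucket
        "modelDataSource": {"s3DataSource": {"s3Uri": s3_uri}},
    }


def start_import_and_wait(request, region_name, poll_seconds=60):
    """Start the import job and poll until it leaves the InProgress state."""
    import time

    import boto3  # imported lazily; needs AWS credentials at call time

    bedrock = boto3.client("bedrock", region_name=region_name)
    job_arn = bedrock.create_model_import_job(**request)["jobArn"]
    while True:
        job = bedrock.get_model_import_job(jobIdentifier=job_arn)
        if job["status"] != "InProgress":
            return job  # final job description, including its status
        time.sleep(poll_seconds)
```

A call could look like `start_import_and_wait(build_import_job_request("sarvam-import", "sarvam-2b", "<role-arn>", "s3://<bucket>/sarvam-2b-v0.5/"), "<region>")`, with every value replaced by your own.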

Step 3— Testing with sample prompts on sarvam and codellama

With Sarvam, I tested with a few prompts in Hinglish (a question in Hindi written in English), Kannada (summarizing a news article about the drubbing the Indian cricket team is receiving down under!!) and Hindi.

With CodeLlama, I tried a few coding-related tasks, such as writing and debugging code.
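These tests can also be run outside the console playground. Below is a rough sketch that calls an imported model through the boto3 `bedrock-runtime` client's `invoke_model`, the same call used for the built-in FMs. The request field names (`prompt`, `max_tokens`, `temperature`) are an assumption on my part, as the accepted fields depend on the imported model. The helper names are hypothetical, and the model ARN comes from your own import job.

```python
import json


def build_llama_prompt(system_text, user_text):
    """Wrap a system and a user message in the [INST] / <<SYS>> markers."""
    return f"[INST]\n<<SYS>>{system_text}<</SYS>>\n{user_text}\n[/INST]"


def build_request_body(prompt, max_tokens=256, temperature=0.1):
    """Serialize the request; the field names here are assumed, not confirmed."""
    return json.dumps(
        {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    )


def invoke_imported_model(model_arn, prompt, region_name):
    """Send the prompt to the imported model and return the parsed JSON reply."""
    import boto3  # imported lazily; needs AWS credentials at call time

    runtime = boto3.client("bedrock-runtime", region_name=region_name)
    response = runtime.invoke_model(
        modelId=model_arn,
        body=build_request_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())
```

The shape of the returned JSON also depends on the model, so it is worth printing the whole response once before picking out fields.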

My prompts were in this format:

[INST]

<<SYS>>You are an expert in Indian languages. You can translate the sentence from Indian languages or even Hinglish to English. You can also answer questions in Hindi, Kannada, Tamil and other Indian languages. <</SYS>>

Translate this sentence to english - "Mera order ka status kya hai? Maine 15th october ko order place kya tha apke website me"

[/INST]

[INST]

<<SYS>>You are an expert programmer that helps to review Python code for bugs. <</SYS>>

<<user>>This function should return a list of lambda functions that compute successive powers of their input, but it doesn’t work:

def power_funcs(max_pow):
    return [lambda x:x**k for k in range(1, max_pow+1)]

the function should be such that [f(2) for f in power_funcs(3)] should give [2, 4, 8], but it currently gives [8,8,8]. What is happening here?<</user>>

[/INST]

Final thoughts

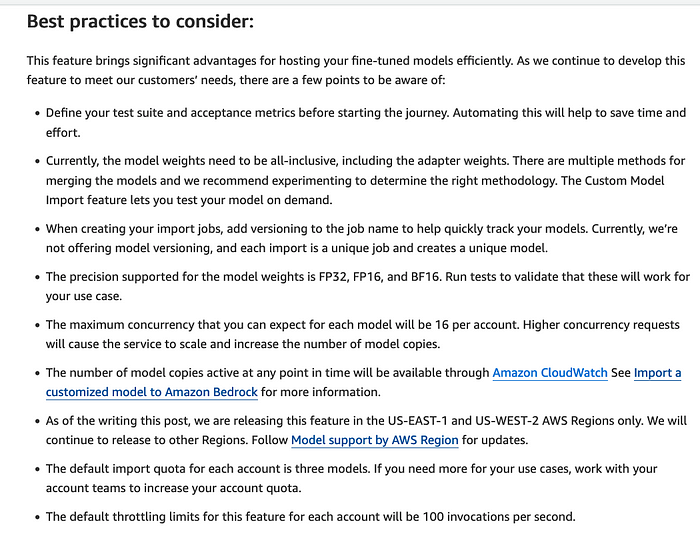

My first preference would be to use the wide range of foundation models already available in Amazon Bedrock; Custom Model Import is the option to reach for when I need a specialized FM that is not yet available on Bedrock. While this was a quick proof of concept, you should also consider the following aspects mentioned in the AWS blog before moving to production:

Custom Model Pricing:

Please refer to https://aws.amazon.com/bedrock/pricing/ for the latest pricing.

Hope this blog was useful. Please do share your feedback via this blog or connect with me on LinkedIn 🙏

Resources:

- AWS blog: Amazon Bedrock Custom Model Import

- AWS Documentation: https://docs.aws.amazon.com/bedrock/latest/userguide/model-customization-import-model.html

- Sarvam on HuggingFace: https://huggingface.co/sarvamai/sarvam-2b-v0.5

- CodeLlama on HuggingFace: https://huggingface.co/codellama/CodeLlama-7b-Instruct-hf

- CodeLlama: https://ai.meta.com/blog/code-llama-large-language-model-coding/

- Sarvam: https://www.sarvam.ai/blogs/sarvam-1